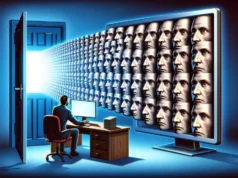

In an increasingly digitized, data-driven world, the question of who truly owns a person’s digital identity, and biometric data in particular, is fiercely debated among tech giants, policymakers, corporations, ethicists, and, importantly, consumers. Central to this debate is an unsettling paradox: biometric data offers benefits that could define the future of security and personalization, yet it carries inherent risks of misuse, discrimination, and privacy erosion that cannot be overlooked.

Biometric data is digitally stored personal information derived from our physical and behavioral characteristics, such as fingerprints, voice samples, iris scans, and even behavior patterns. The convenience and security it brings, particularly through personalized consumer experiences and anti-fraud protections, have led to a surge in its collection, storage, and use. Yet the ethical implications of that collection remain largely unresolved, raising vexing questions about ownership, consent, privacy, security, and fairness.

From smartphones that unlock with facial recognition to voice-activated home assistants to tomorrow’s contactless payment systems, biometric data is seeping into the architecture of everyday life. Yet most users are unaware of the scale and implications of this collection. That lack of transparency has fueled accusations against tech giants such as Google, Amazon, and Facebook, which are frequently embroiled in lawsuits and subjected to scrutiny over data privacy and usage.

A recent lawsuit against Twitter by the privacy-focused nonprofit Electronic Frontier Foundation (EFF), over allegations of unauthorized storage of face and fingerprint data, underscores the need to settle who actually owns this data. At the heart of EFF’s argument is the claim that biometric data is nontransferable and belongs unquestionably to the user.

Similarly, digital rights organizations such as the Electronic Privacy Information Center (EPIC) advocate for broad privacy protections that encompass biometric data. In a defining statement, EPIC declared, “Biometric identifiers are by nature unique and permanent characteristics of the individual. A breach of biometric data is irreparable. Unlike passwords, biometric templates cannot be modified.”

The stances of tech companies vary widely, reflecting how young this debate is. Many argue that consumers give implied consent to share their data when they use personalized services, creating a trade-off between data privacy and consumer convenience. Yet this argument is increasingly being tested by legal challenges and regulatory changes.

Since the European Union’s General Data Protection Regulation (GDPR) took effect in 2018, people in the EU have had explicit rights over their data, including biometric information. Other jurisdictions, such as California (the California Consumer Privacy Act) and Illinois (the Biometric Information Privacy Act), have enacted laws that protect biometric information and give consumers more control over their digital identifiers. These laws give consumers the legal right to know what is being collected, why, and what is done with that data, making consent to its use clearer.

But legislation is only part of the solution. How private corporations and public organizations interpret these laws and enforce safeguards is critical. A breach of a biometric system is especially damaging because biometric data is immutable: once compromised, a fingerprint or iris pattern, unlike a password, cannot be changed.

There are also concerns about equity and discrimination. An MIT Media Lab study found that commercial facial-analysis software from vendors including IBM and Microsoft made more errors on darker-skinned and female faces than on lighter-skinned and male ones. The ethical question therefore extends beyond who owns the data to how it is used, and potentially misused.

The debate over the rightful ownership of biometric data marks a critical juncture for technology and ethics. While clear laws and guidelines can help delineate ownership boundaries, the tech industry also needs a broader cultural shift toward transparency and responsible use.

Ultimately, this ethical debate hinges on striking a balance between technological advancement and data protection. Where that balance falls may differ across legal and cultural frameworks, but at its center it means giving consumers rightful control over, and clear information about, their digital identities.

Sources:

1. “EFF Sues Twitter Over Alleged Biometric Data Breach.” EFF. Retrieved from https://www.eff.org/

2. “Facial Recognition Tech Discriminates.” MIT Media Lab. Retrieved from http://news.mit.edu/2018/study-finds-gender-skin-type-bias-artificial-intelligence-systems-0212

3. “Privacy and Biometric Data.” EPIC. Retrieved from https://epic.org/privacy/biometrics/

4. “Guide to the General Data Protection Regulation (GDPR).” European Commission. Retrieved from https://ec.europa.eu/info/law/law-topic/data-protection/data-protection-eu_en